From GPT-3 and ChatGPT to GPT-4: OpenAI’s road to Artificial General Intelligence (AGI)?

The world of natural language processing (NLP) has been revolutionized by the advent of powerful language models like ChatGPT. Built on OpenAI’s GPT-3 family of models, ChatGPT has already become a vital tool in various professional spheres, creating value and efficiency across industries. But as with any technological innovation, ChatGPT has its limitations, which have paved the way for GPT-4, a more capable, multimodal language model.

Disclaimer: as these language models rely on probabilities to generate their answers, you might not always get the same answers as those shown throughout this article.

ChatGPT: the origins

ChatGPT was released on 30th November 2022 and is the first ever platform to reach 1 million users in less than a week (only 5 days!). Many people have heard of it, some have used it, but few really understand its inner workings.

Let us first start by asking it to define itself. When prompted “Who are you?”, here is what ChatGPT answers back:

“I am ChatGPT, a conversational artificial intelligence language model developed by OpenAI. I am designed to understand and respond to natural language input in a human-like manner. My purpose is to assist and provide helpful responses to users seeking information, guidance, or just looking to have a conversation.”

At Sia Partners and Heka, we have been writing about GPT since 2019 but if you are unfamiliar with some of those terms, let’s break down the key elements of this definition:

Artificial intelligence:

Set of systems that simulate human intelligence by automating tasks through algorithms (ChatGPT specifies that it strives to act in a “human-like manner”)

Language model:

Model trained on a huge corpus of text to learn the probabilistic structure of the language (the probability of each word appearing after a given sequence of text), and then able to reproduce patterns, structures and relationships within the language. In our case, the language model is GPT-3, which was released in June 2020 after being trained on text (books, news articles, Wikipedia pages, forum threads…) drawn from about 45 TB of raw data, for an estimated cost of $4.6M.
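To make “the probability of each word appearing after a given sequence” concrete, here is a minimal sketch of a toy model that only looks at the previous word (a so-called bigram model). It is an illustration under strong simplifying assumptions: GPT-3 is a large neural network that conditions on long contexts, not a table of word counts, and the corpus and function names below are made up for this example.

```python
import random
from collections import Counter, defaultdict

# Toy corpus, made up for this illustration.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."

def train_bigram(text):
    """Count, for each word, how often each other word follows it."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def next_word_probs(counts, word):
    """Turn the raw counts into a probability distribution over next words."""
    total = sum(counts[word].values())
    return {w: c / total for w, c in counts[word].items()}

def sample_next(counts, word, rng):
    """Draw the next word at random, weighted by its probability."""
    probs = next_word_probs(counts, word)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]

counts = train_bigram(corpus)
probs_after_the = next_word_probs(counts, "the")
print(probs_after_the)

# Different random seeds can give different continuations for the same word.
print([sample_next(counts, "the", random.Random(seed)) for seed in range(5)])
```

Because the next word is sampled rather than always taken as the single most likely one, two runs can produce different text from the same starting point, which is the reason for the disclaimer at the top of this article.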

Conversational language model:

ChatGPT is a fine-tuned version of GPT-3 (from the GPT-3.5 series) that is specialised in conversational tasks (hence the added “Chat” prefix). This has made ChatGPT very good at human-like text generation in multiple languages and in many different contexts.

OpenAI:

OpenAI was initially a non-profit research organisation founded in 2015 with the support of famous billionaires such as Elon Musk and Peter Thiel. Since then, the company has made its name thanks to its advancements in AI, and especially in generative AI (the capability to generate text or images from a text prompt) with its DALL·E and GPT models.

What can ChatGPT be leveraged for?

Now that we have seen what ChatGPT actually is, here are a few examples of how ChatGPT can be integrated into the professional world (and how it could potentially affect your own job):

- Customer support: Companies have leveraged ChatGPT to create AI-powered chatbots that can handle customer queries and support tasks, significantly reducing response times and enhancing customer satisfaction.

- Content creation: Writers and marketers can use ChatGPT to generate high-quality content, such as blog posts, social media updates, and email templates, saving time and improving efficiency.

- Code completion: Developers can take advantage of ChatGPT’s capabilities to autocomplete code snippets, helping them write code more efficiently and reducing the chances of errors.

- Data analysis: ChatGPT can be used for textual data analysis in companies of all sizes. It can extract important information from text such as reports, customer feedback or social media posts.

- Recruiting: ChatGPT can be used to help HR professionals assess candidates and rank them according to their skills and qualifications.

- Personalised training: ChatGPT can be used to provide personalised online training to employees. Employees can ask questions and get quick answers about company processes, policies and procedures.

These are but a few examples of tasks that ChatGPT could help us with. Nonetheless, while this could seem intimidating, we must remember that these models remain nothing more than smart tools that we must guide to enhance our productivity.

Is there anything ChatGPT cannot do?

Despite its impressive capabilities, ChatGPT has some limitations.

Unreliability:

The first limitation, and maybe the most troublesome one, can be witnessed directly in its answer to the question “Who are you?” that we asked earlier: ChatGPT assures the reader that it can “understand and respond to natural language input”. However, this is not exactly right since the model does not really “understand” the language: it has simply learnt to generate results in a probabilistic way without truly knowing what it is really talking about. This is but one example of the model’s unreliability. Indeed, ChatGPT may not always provide accurate information, it does not cite its sources (which obliges the user to systematically check the facts that it puts forward), it can repeat or contradict itself if the prompt is too long or complex, or even completely hallucinate an answer if its source data does not contain sufficient relevant information.

Here is an example of ChatGPT completely hallucinating what RLHF means (it actually refers to Reinforcement Learning from Human Feedback, a method used to train ChatGPT):

Another answer with the same prompt:

Ethical concerns:

Despite OpenAI’s efforts to moderate the model, it can still sometimes generate biased or harmful content, inadvertently perpetuating stereotypes and misinformation.

To easily circumvent the moderation rules that filter ChatGPT’s answers (this practice is called “jailbreaking”), we can embed our question inside a conversation between two very nice people, with one pretending to be evil. However, if the question is clearly dangerous (how to make a Molotov cocktail, how to produce methamphetamine…), it will not answer.

Outdated knowledge:

ChatGPT’s training data is limited to sources available up until September 2021, meaning it lacks knowledge of events and developments that have occurred since then (for example, it does not know that Argentina won the 2022 football World Cup, or that Ukraine and Russia are at war).

Limited reasoning capabilities:

Even after an update on January 30th, 2023, the model can still have a hard time answering simple logic or arithmetic questions.

See https://github.com/giuven95/chatgpt-failures for more failures.

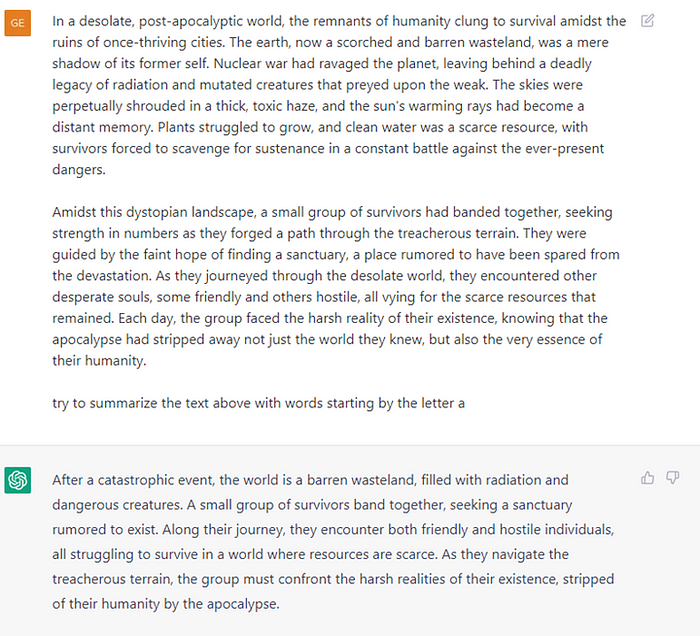

Some tasks are too complicated:

Here it refuses to follow the instruction given in the prompt.

These limitations call for the development of GPT-4, an even more advanced and refined language model. The next generation of language models must address these concerns to provide more accurate, domain-specific, and ethically responsible AI-powered solutions.

ChatGPT based on GPT-4, the new revolution?

More creative, more reliable and more logical: these are the qualities emphasized by OpenAI when presenting its newest GPT version, launched on March 14th, 2023. It is now a “multimodal model”, meaning it can process images as well as text. OpenAI proudly writes that its model has passed the “bar exam with a score around the top 10% of test takers”, whereas GPT-3.5’s score was in the bottom 10%.

OpenAI has not disclosed any technical information regarding the model size, the hardware, the training method or the dataset construction. Therefore, this part only focuses on its capabilities. As of today, if you want to try it out for yourself, you will have to subscribe to ChatGPT Plus.

It can process images

ChatGPT has now become a multimodal model, a model that can process text and images! From a given image, it can analyse a scene and describe its content. It can analyse graphs and read text found in the images. This functionality is not yet available to regular users, even those who have access to ChatGPT Plus. No release date has been given for this functionality.

However, some examples given by OpenAI show how powerful the model is and how varied its uses could be:

- Generate HTML code to create a webpage from a given drawing

- Read text from a picture of a physics exam and give the answers to the questions

- Understand and explain memes: it understood that chicken nuggets arranged on a plate looked like the Earth’s continents.

- Detect unusual things and explain them: it understood that a picture of a man ironing his clothes on the back of a moving taxi was unusual… well, is that not obvious?

- Identify objects in images: this could make it possible to create a recipe from a picture of your groceries, or maybe to point out what is missing to make your recipe…

If you want to see some examples for yourself, go watch OpenAI’s demo (https://www.youtube.com/watch?v=outcGtbnMuQ).

It is better with languages

This is demonstrated by GPT-4’s results on the MMLU benchmark translated into many languages. The MMLU benchmark is a multiple-choice exam covering 57 tasks that tests a model’s multitask accuracy. It was created by academic researchers rather than by OpenAI, and it is widely used to compare language models. GPT-4 got better results when taking the exam in Punjabi (a language spoken in some regions of Pakistan and India) than GPT-3.5 did when taking it in English, which shows a big improvement.

Tested on human exams and obtained excellent results

35 different academic and professional exams were given to GPT-3.5 and GPT-4. The tests given were very broad, from mathematics, to history and art. Most of the time, GPT-4 obtained better results than GPT-3.5 and only once obtained a worse result. The results were compared to human results, and they seem excellent. However, OpenAI warns that the compared “reported numbers are extrapolated and likely have wide uncertainty”. Is this a way to reassure us that we will still be smarter than the model?

Can process longer prompts and write longer answers

A prompt sent to a GPT model and the answer received cannot together exceed a certain number of tokens (a token is a piece of a word, roughly three quarters of an English word on average). For GPT-3.5, the limit was 4,096 tokens. GPT-4 doubles this amount and now processes prompts and answers with a total of 8,192 tokens. A version with a larger window, allowing it to process about 25k words, is also offered. This is very useful when you want to summarise long texts.
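The arithmetic behind these limits can be sketched in a few lines of Python. This is a rough estimate under stated assumptions: the ratio of 0.75 English words per token is a rule of thumb rather than an exact tokenizer, the 32,768-token figure is the window size OpenAI announced for the extended GPT-4 variant, and the helper names are made up for this example. A real application would count tokens with an actual tokenizer.

```python
# Rule of thumb (an approximation, not an exact tokenizer):
# one token is roughly 0.75 English words.
WORDS_PER_TOKEN = 0.75

def estimate_tokens(text):
    """Rough token estimate derived from the word count."""
    return round(len(text.split()) / WORDS_PER_TOKEN)

def fits_in_context(prompt, max_answer_tokens, context_window):
    """The prompt and the answer share one window, so both must fit together."""
    return estimate_tokens(prompt) + max_answer_tokens <= context_window

def approx_words(context_window):
    """Approximate number of English words a window of that size can hold."""
    return int(context_window * WORDS_PER_TOKEN)

print(approx_words(8192))    # 6144
print(approx_words(32768))   # 24576, i.e. about 25k words

# A made-up 10,000-word report, leaving room for a 500-token summary.
long_report = "word " * 10000
print(fits_in_context(long_report, 500, 8192))    # False
print(fits_in_context(long_report, 500, 32768))   # True
```

In this sketch, a 10,000-word document is too long for the 8,192-token window once room is left for the answer, but fits comfortably in the larger one, which is why the extended version matters for summarising long texts.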

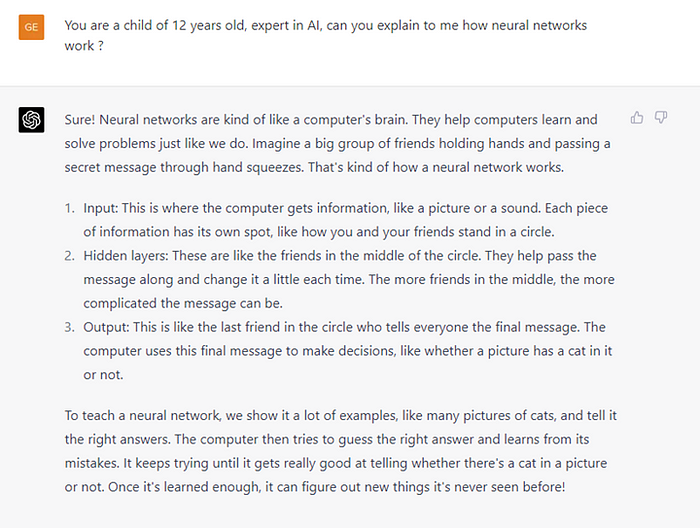

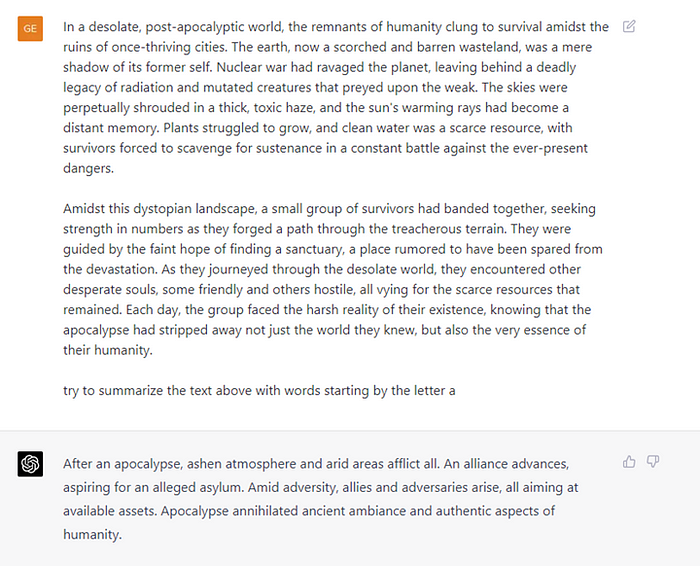

Steerability: changing ChatGPT’s tone

The prompt can be used to tell ChatGPT what tone it should be using when responding. It can be extremely useful to adapt the answer to the user’s knowledge and to make sure it is understandable. It can also be used to simplify complex problems.

For example, we asked GPT-3.5 to act as a 12-year-old child who is an expert in AI and to explain how neural networks work:

And we asked GPT-4 the same question:

GPT-4 was able to adapt its answer to the role it is supposed to play. Its answer is simplified and uses figures of speech that a 12-year-old child could use.
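For developers, the same kind of role instruction is typically expressed as a list of chat messages, where a “system” message sets the tone and a “user” message carries the question. The sketch below only builds such a list and prints it; it does not call any API, the helper name `build_messages` is made up for this example, and the wording of the instruction is our own paraphrase of the prompt used above.

```python
import json

def build_messages(tone_instruction, question):
    """Build a chat-style message list: the system message sets the tone,
    the user message carries the actual question."""
    return [
        {"role": "system", "content": tone_instruction},
        {"role": "user", "content": question},
    ]

messages = build_messages(
    "You are a 12-year-old who is an expert in AI. Explain things simply.",
    "How do neural networks work?",
)

# Print the payload that would be sent to a chat model.
print(json.dumps(messages, indent=2))
```

Keeping the tone in a separate system message means the same question can be reused with different audiences (a child, a student, an expert) by changing a single string.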

However, are those changes significant to us? Let’s find out!

Does GPT-4 solve some of GPT-3.5’s limitations?

Let’s try the examples we mentioned above to see if GPT-4’s answers are different:

(If you want to try it out for yourself, you will need a ChatGPT Plus subscription.)

Unreliability:

This time GPT-4 seems to give relevant answers to the same questions.

Ethical concerns:

When given the same prompt of a conversation between John and Nova, here is what GPT-4 answers:

It seems GPT-4 is even more creative and gives more details …

Outdated knowledge:

GPT-4 still has no knowledge of events after September 2021.

Complicated tasks:

Not perfect but would we do better?

Have we reached Artificial General Intelligence?

The performance of GPT-4 is impressive: on many benchmarks it outperforms human annotators. Large language models are likely to reshape the labour market; a study by OpenAI researchers estimates that around 80% of the U.S. workforce could have at least 10% of their work tasks affected by large language models.

However, because of the way they are trained, these models cannot achieve human intelligence. As mentioned earlier, GPT-4 has learned to mimic human behaviour by predicting the next word in a sentence. It has no real understanding of language, logic or reasoning.

GPT-4 models are a big step forward on the road to general artificial intelligence, but the following limitations must be overcome to reach the singularity:

- Confidence: The model has difficulty identifying when it should be confident and when it should just guess. It always adopts a confident tone even when it hallucinates certain facts.

- Continuous learning: Although the model has a short-term memory to remember the user’s messages, it is unable to use information from previous conversations or from other users to adjust its responses. The lack of knowledge about 2022 data is an illustration of this limitation.

- Reasoning: LLMs have no concept of logic. For example, although they can produce convincing proofs and philosophical arguments, they are prone to statistical fallacies, cognitive biases, and irrationality.

- Generalisation: GPT-4 is very sensitive to details in the formulation of prompts, which illustrates a lack of general understanding and conviction.

- Genius: Finally, to achieve human performance, a model must be able to plan ahead and have “eureka” ideas. GPT-4 has only a small memory of the input and its response, which makes long-term planning very difficult. Furthermore, it has been trained to mimic human behaviour, not to surpass it, so “eureka” performance is likely to remain out of reach.

Conclusion

We can see from those examples that there was an improvement between the answers of GPT-3.5 and those of GPT-4. The newest model seems to be able to handle more complex tasks, to understand the context of the questions better and to be more creative in its answers. However, it does not seem to prevent the creation of content that “promotes or incites violence, harm or any illegal activities” as much as GPT-3.5. It is possible that this will be improved in later stages when the model becomes accessible to all users.

GPT-4 still shares some of GPT-3.5’s limitations. OpenAI emphasizes many times throughout its article that GPT-4 is still not reliable and that facts should be double-checked by its users. Moreover, various limitations, embedded in the model’s very structure, restrict GPT-X models in the development of their intellectual faculties. GPT models can be considered an early, although largely incomplete, version of an artificial general intelligence.

Final question: When will it be accessible to all users?

To date, OpenAI has not published any date for when GPT-4 will be accessible to all users on its platform. It is currently available to accounts subscribed to ChatGPT Plus. However, you can talk to GPT-4 without paying for a subscription by going to Bing Chat.

The article presenting GPT-4 also mentions that some companies had early access to GPT-4 and are already using some of its features, like its ability to handle images.

As a developer, you can join a waitlist to access the API. OpenAI is also working on plugins to make ChatGPT interact with third-party applications. As of today, only a few users, selected from a waitlist, have access to them.

In conclusion, ChatGPT will be used in a lot of third-party applications, and we may not even know it!